Abstract

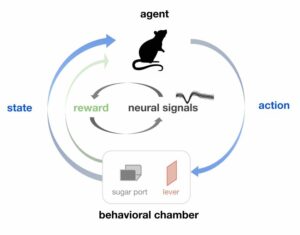

Current neural decoding methods typically aim to explain behavior from neural activity via supervised learning. However, since a subject's learning is generally strongly tied to its expectations of long-term reward, we hypothesize that extracting an intrinsic reward function as an intermediate step will lead to better generalization and improved decoding performance. We use inverse reinforcement learning to infer, in closed form, an intrinsic reward function underlying a behavior, and associate it with neural activity in an approach we call NeuRL. We study the behavior of rats in a response-preparation task and evaluate the performance of NeuRL in simulated inhibition and per-trial behavior prediction. By assigning clear functional roles to defined neuronal populations, our approach offers a new interpretation tool for complex neuronal data with testable predictions. In per-trial behavior prediction, our approach furthermore improves accuracy by up to 15% compared to traditional methods.